Why CMOs Need to Understand What’s Under the Hood

AI is no longer a shiny object or side experiment. It is fast becoming the foundation of how modern marketing teams operate, embedded everywhere from strategy to production to promotion to analytics. In fact, 85% of marketers are using AI for content creation, and other use cases are rapidly gaining ground. So beyond “which tool are you using?”, the foundational question is “what’s powering that tool?”

Large Language Models (LLMs) are the engines behind generative AI tools like ChatGPT, Claude, Gemini, and countless others. And while CMOs don’t need to become AI engineers, they absolutely need to understand the capabilities, limitations, and strategic implications of these models.

This post breaks down the landscape of leading AI models, connects them to real marketing use cases, and lays out what CMOs must do to structure their teams, plan responsibly, and measure the true business impact of AI.

What Are AI Models, Really? (And Why Marketers Should Care)

At their core, large language models (LLMs) are trained systems capable of generating human-like text based on massive amounts of data. They’re the brains behind many of the AI-driven tools your teams already use. For example, an LLM is the core technology that powers ChatGPT. Specifically, ChatGPT uses a Generative Pre-Trained Transformer (GPT), which is a type of LLM, to generate text and engage in conversations. In fact, half of Americans now use LLMs, according to a March 2025 national survey by Elon University’s Imagining the Digital Future Center. (source)

These models are built using both structured and unstructured data pulled from across the Internet — including sources like academic content, websites, and, depending on the model, user-generated platforms such as Reddit and other public forums. This allows them to synthesize not only factual information but also qualitative opinion signals that can reflect how people talk, debate, and express preferences in the real world. (see “Reddit sues Anthropic for allegedly not paying for training data”)

But not all models are the same.

Different models have different strengths: some excel at longform content, others at search or summarization. Some are open-source and customizable; others are closed, which can make them safer but less flexible. Knowing the difference impacts how you brief your team, allocate your budget, and govern your brand voice.

It also helps you avoid buying different tools that are powered by the same underlying model, or worse, making strategic decisions based on generic outputs.

Meet the Core Players: Models Behind the Marketing Tools

| Vendor | Sample Models | Known For | Why Marketers Should Care |
| --- | --- | --- | --- |
| OpenAI | GPT-4, GPT-4o | Versatile, plugin-ready | Powers ChatGPT, Jasper, Copy.ai, Notion |
| Anthropic | Claude 3 | Long context window, safety | Better for longform, fact-rich content |
| Google | Gemini 1.5 | Search-native, multi-modal | Excellent for SEO, ads, Google ecosystem |
| Meta | LLaMA 3 | Open-source, dev-flexible | Great for brand-safe internal tools |
| Mistral | Mixtral | Lightweight, fast, open | Ideal for custom lightweight workflows |

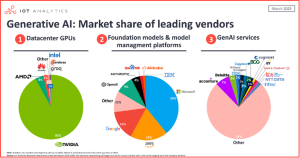

While many tools market themselves differently, they often sit on top of one of these core models. Recent market data from IoT Analytics (March 2025) highlights the growing dominance of vendors like Microsoft, OpenAI, and Google in the foundational model landscape—context that can help CMOs navigate vendor claims and understand the true distribution of influence across the AI ecosystem. Understanding what’s under the hood helps you make smarter build vs. buy decisions, assess risks, and align capabilities with your team’s strengths.

Source: IoT Analytics, “The leading generative AI companies,” March 2025

Beyond the Models: Application and API Layer Tools You Already Use

Many marketers don’t interact with LLMs directly. Instead, they use applications that leverage LLMs, for example:

- Generative copy and long-form content: Jasper, Copy.ai, Grammarly, Anyword, Writesonic

- Personalization and journey orchestration: Dynamic Yield, Braze, Adobe Target

- Presentations: Gamma, Canva, Beautiful.ai

- Predictive analytics and ABM intent: Informa TechTarget Priority Engine, 6Sense, Demandbase

- AI video and rich media production: HeyGen (see my AI video synthesis here), Synthesia, Lumen5

Many of these tools are essentially wrappers on top of models like GPT-4, Claude, or Gemini. The experience and outputs depend heavily on the UI, prompt design, and fine-tuning decisions made by the vendor.

At the infrastructure level, developers and marketing ops teams may also leverage:

- Hugging Face (model hosting)

- LangChain (agent orchestration)

- Azure OpenAI / Amazon Bedrock (managed access to models)

CMOs should ensure marketing leadership understands the stack beneath the apps they approve. Why? Because switching tools may not actually give you new capabilities—just different interfaces over the same core brain.
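One lightweight way to spot that overlap is to keep an inventory of approved tools alongside the model each vendor says it runs on. The sketch below is illustrative only: the tool names and model assignments are hypothetical placeholders, not claims about any real product, and the inventory should be filled in with what your vendors actually disclose.

```python
from collections import defaultdict

# Hypothetical inventory: tool names and model assignments are placeholders.
# Replace them with what each vendor actually tells you.
approved_tools = {
    "Copy tool A": "GPT-4o",
    "Copy tool B": "GPT-4o",
    "Research assistant C": "Claude 3",
    "Ad generator D": "Gemini 1.5",
}


def group_by_model(tools):
    """Return {model: sorted list of tools built on that model}."""
    grouped = defaultdict(list)
    for tool, model in tools.items():
        grouped[model].append(tool)
    return {model: sorted(names) for model, names in grouped.items()}


def overlapping_models(tools):
    """Return only the models that power more than one approved tool."""
    return {
        model: names
        for model, names in group_by_model(tools).items()
        if len(names) > 1
    }


overlaps = overlapping_models(approved_tools)
for model, names in overlaps.items():
    print(f"{model} powers {len(names)} tools: {', '.join(names)}")
```

Any model that shows up under several tools is a prompt to ask whether those subscriptions deliver different capabilities or just different interfaces.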

Choosing the Right Model(s) for Your Marketing Use Cases

Model strengths, weaknesses, and compatibility factors are evolving quickly. The recommendations below reflect leading capabilities at a point in time—but expect shifts as models update, expand their context windows, or introduce new modalities (e.g., image, video, audio). CMOs and their teams should treat model selection as a dynamic process, revisiting decisions regularly to adapt to performance improvements and strategic needs. For ongoing comparisons, sources like LMArena (formerly Chatbot Arena) and Hugging Face’s Open LLM Leaderboard offer helpful benchmarking and evaluation data across major models.

Different models are optimized for different tasks. Here’s how to match them:

- GPT-4o – Best all-purpose model for short- to medium-form content, quick ideation, and third-party plugin use

- Claude 3 – Best for long documents, safety-sensitive content, and knowledge work

- Gemini – Best for SEO, ads, and integration with Google products

- LLaMA / Mistral – Best for internal custom tools and experimentation

CMOs should guide teams to evaluate models based on:

- Accuracy and hallucination risk

- Tone and brand safety

- Context window (how much text the model can take in and “remember” within a single conversation)

- Cost structure and licensing

- Ecosystem compatibility
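These criteria can be turned into a simple weighted scorecard so that comparisons are explicit rather than impressionistic. The following is a minimal sketch: the weights, the 1-to-5 scores, and the names "Model X" and "Model Y" are all hypothetical assumptions chosen for illustration, and each team would set its own.

```python
# Hypothetical weights for the five criteria above; they must sum to 1.0.
WEIGHTS = {
    "accuracy": 0.30,
    "brand_safety": 0.25,
    "context_window": 0.15,
    "cost": 0.15,
    "ecosystem": 0.15,
}


def weighted_score(scores, weights=WEIGHTS):
    """Combine 1-5 criterion scores into a single weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[name] * scores[name] for name in weights)


# Hypothetical team ratings (1 = poor, 5 = excellent), not real benchmarks.
candidates = {
    "Model X": {"accuracy": 4, "brand_safety": 5, "context_window": 5,
                "cost": 2, "ecosystem": 3},
    "Model Y": {"accuracy": 4, "brand_safety": 3, "context_window": 3,
                "cost": 4, "ecosystem": 5},
}

# Rank candidates from highest to lowest weighted score.
ranking = sorted(
    ((name, weighted_score(scores)) for name, scores in candidates.items()),
    key=lambda pair: pair[1],
    reverse=True,
)

for name, score in ranking:
    print(f"{name}: {score:.2f}")
```

The value of the exercise is less the final number than the conversation about weights: a regulated brand might weight brand safety far higher than cost.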

Ask vendors what model powers their tool. Don’t assume you’re getting differentiated performance just because the UI is slick.

Most importantly, regardless of the chosen model and application, CMOs should promote an AI-forward philosophy—one where AI enhances productivity, creativity, and business performance without displacing critical human judgment, vision or brand stewardship.

Strategic Plays CMOs Can Make Now

To operationalize AI, CMOs need more than ideas—they need structure. Consider the following action items:

Appoint an AI Lead or Director

Assign someone to own AI enablement across the marketing function. They don’t need to be a data scientist, but they should:

- Evaluate new tools and vendors

- Own training documentation and team onboarding

- Interface with IT, Legal, and RevOps (and, ideally, an existing AI task force within your organization)

To be clear, this person’s job is to facilitate AI adoption, not to be the only person using AI. Everyone on the team should be experimenting with AI and incorporating it into their workflows to drive efficiency, creativity and innovation.

Stand Up an AI Task Force

Form a cross-functional team from content, digital, demand gen, and marketing ops. The team should meet regularly to:

- Test tools

- Share best practices

- Review ethical guidelines and usage policy

- Gauge AI-driven success

- Identify opportunities for experimentation and scale

Create Role-Based AI Enablement

Train content teams on prompt libraries. Train digital marketers on chat-based analytics. Build onboarding modules tied to real tasks, not generic prompt theory.

Prioritize Revenue-Driving Use Cases

Focus your initial AI investments on high-impact areas that directly contribute to pipeline, conversions, and customer retention. Prioritize use cases like personalized outbound messaging, lead scoring, predictive content performance, and automated reporting and assessment that frees up resources for strategic work.

Establish a Culture of Experimentation

Encourage pilots and internal experiments, but tie them to measurable goals and create pathways for scaling AI:

- Productivity (e.g., reduced content production time)

- Innovation (e.g., new campaign formats only possible with AI)

- Business impact (e.g., increase in conversion or MQL quality)
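Tying a pilot to measurable goals can be as simple as comparing a baseline to the pilot result and checking the change against a target set in advance. The sketch below assumes hypothetical metric names, numbers, and goals; a negative goal means the metric should fall (such as hours per asset), and a positive goal means it should rise.

```python
def percent_change(baseline, pilot):
    """Relative change from baseline to pilot, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (pilot - baseline) / baseline * 100


# Hypothetical pilot data. "goal_pct" is the target percent change agreed
# before the pilot started.
pilot_results = {
    "hours_per_blog_post": {"baseline": 8.0, "pilot": 5.0, "goal_pct": -25.0},
    "landing_page_conversion_rate": {"baseline": 0.020, "pilot": 0.023,
                                     "goal_pct": 10.0},
}


def evaluate(results):
    """Return the percent change and whether the goal was met, per metric."""
    report = {}
    for metric, r in results.items():
        change = percent_change(r["baseline"], r["pilot"])
        goal = r["goal_pct"]
        # A negative goal is a reduction target; a positive goal is a lift.
        met = change <= goal if goal < 0 else change >= goal
        report[metric] = {"change_pct": round(change, 1), "goal_met": met}
    return report


report = evaluate(pilot_results)
for metric, outcome in report.items():
    print(metric, outcome)
```

A real evaluation would also want enough volume to rule out noise, but even this level of rigor separates pilots worth scaling from pilots that only felt productive.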

Launch an AI Playbook

Include:

- Purpose and scope of AI use in marketing

- Guidelines for compliance, brand safety, and data usage

- Clear roles and responsibilities for governance and oversight

- Expectations for cross-functional collaboration with Legal, Security, and IT

- Approved tools and models

- Governance guidelines

- Usage tiers (internal use, customer-facing use)

- Security and brand guardrails

Looking Ahead: From Static Tools to Intelligent Agents

We’re quickly moving from AI as a tool to AI as a co-worker.

New platforms support “agents” — models that can:

- Retrieve data from your CRM

- Write and schedule emails

- A/B test copy and update landing pages

This shift demands operational planning. CMOs need to:

- Identify workflows that can be partially or fully automated

- Define where humans still add the most value (e.g., narrative, strategy)

- Collaborate with Marketing Ops and IT to build secure, functional agents

In short: LLMs will move from content creation to campaign execution. Get ready now.

Key Takeaways for Marketing Leaders

- Structure your team to use and scale AI, not just experiment with it.

  - Appoint owners

  - Create documentation

  - Train by role

- Invest in the right talent and skills, including the following activities as cited by a 2025 CMO survey by BCG:

  - Assessing GenAI skills for talent planning

  - Cross-functional pods for GenAI

  - Identifying GenAI super users

  - Upskilling all employees

- Move from tool-chasing to capability-building

  - Ask what model powers your tools

  - Focus on tasks, not brands

- Build a culture of experimentation with guardrails

  - Let teams test, but require them to tie usage to outcomes

  - Reward speed and innovation, but track adoption and risk

- Operationalize your AI learning cycle

  - Regular AI reviews (wins, fails, insights)

  - Shared playbooks and prompt libraries

  - Quarterly stack assessments

- Track the right metrics

  - AI tool usage per role

  - Time saved on repeatable tasks

  - Impact on core KPIs (pipeline, CTR, content velocity)
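The first two of these metrics can be computed from a very simple usage log. The sketch below assumes a hypothetical weekly log with one row per team member; the field names, people, and numbers are invented for illustration, and self-reported time savings should be treated as estimates.

```python
from collections import defaultdict

# Hypothetical weekly log: one entry per team member.
usage_log = [
    {"person": "ana", "role": "content", "used_ai": True, "minutes_saved": 90},
    {"person": "ben", "role": "content", "used_ai": False, "minutes_saved": 0},
    {"person": "cy", "role": "demand_gen", "used_ai": True, "minutes_saved": 45},
    {"person": "dee", "role": "demand_gen", "used_ai": True, "minutes_saved": 30},
]


def summarize_by_role(log):
    """Return adoption rate and total hours saved for each role."""
    totals = defaultdict(lambda: {"people": 0, "users": 0, "minutes": 0})
    for entry in log:
        t = totals[entry["role"]]
        t["people"] += 1
        t["users"] += int(entry["used_ai"])
        t["minutes"] += entry["minutes_saved"]
    return {
        role: {
            "adoption_rate": t["users"] / t["people"],
            "hours_saved": t["minutes"] / 60,
        }
        for role, t in totals.items()
    }


summary = summarize_by_role(usage_log)
for role, stats in summary.items():
    print(f"{role}: {stats['adoption_rate']:.0%} adoption, "
          f"{stats['hours_saved']:.2f} hours saved")
```

Tracked week over week, a summary like this shows which roles need more enablement and where time savings are concentrated.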

The future of marketing isn’t about who has the flashiest tools. It’s about who understands the intelligence behind them—and who can integrate and harness that intelligence into high-performing teams that drive innovation and organizational impact.